UiPath’s AI Center Demystified

Understanding the Structure, Flow and Jargon of an AI Center Process

What’s the AI Center For?

UiPath’s AI Center is a way to create, train and deploy custom Machine Learning models for use in automations. Here’s where the datasets are stored, and the training of the model happens. But the AI Center has its own objects and jargon, and it can all get a bit confusing at first. Before we start to use the AI Center, let’s first get an overview of what each term means.

Project

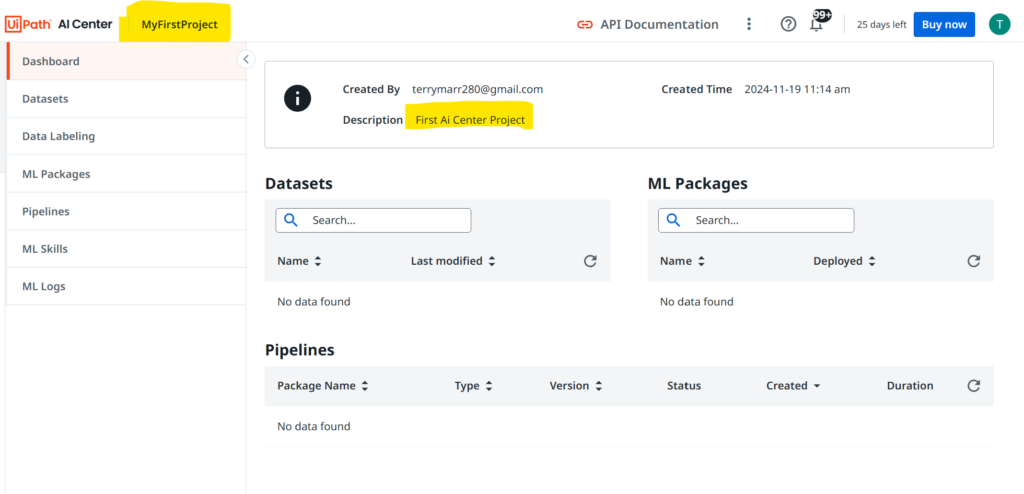

A Project is the top-level organizational container for the model we will build. We give each project a name and an optional description. Once you create a new Project, the Dashboard screen appears:

Notice the left-hand side of the Dashboard. From here, you can access all the other things about the project. Let’s learn what they are.

Datasets

In AI Center, a dataset refers to a folder of some examples that will be used for training or evaluating the model. It is a folder of documents or images, whatever the model is being built to process. We create datasets for training, along with separate datasets for evaluation. At runtime, there is also a way for a robot to upload a file to a dataset. Every file within a folder is considered part of a single dataset.

Data Labeling

For text-based datasets, these are the fields of data that will be extracted from the dataset. From this tab you create a data labeling session and select the dataset you will be working on. A wizard launches which allows you to select the fields and label them. Everything done in this step will be saved back to the dataset. So, Data Labeling isn’t really an object in the project, it’s more of an action you take to modify the dataset.

ML Packages

This is a folder that holds everything it needs to run the process. It holds (or points to) the compiled code that enable the learning and stores the resulting learned model. There are packages available by UiPath, Open-Source packages, or you can import a previously created package for your project. Some models are retrainable, meaning you can use your own dataset, and some are not.

Pipelines

Every time you run an ML Package, it is logged as a pipeline. A pipeline takes inputs, runs its functions, and produces output. Details such as how long it took and its resulting output are stored here. So a pipeline is a specific run of a package.

ML Skills

Once an ML Package has been trained and tested, the resulting learned model can be used in an automation to process real documents. The model is bundled (without its datasets) into something called an ML Skill, which can then be accessed in Studio by a Machine Learning Extractor activity in an automation.

ML Logs

System logs of every event taken in each project can be seen here.

AI Center Objects – A Deeper Dive

Now that we have a high-level understanding, let’s dive a bit deeper into the more complex objects and learn some rules about how they work.

Datasets — Example Files

There are usually 2 folders created in the datasets tab, one for training, and one for evaluation. The general rule of thumb is 80% to train on, 20% to test on. Of course, the testing files must not have been used in training. They must be completely new to the model.

There is a specific way that datasets need to be structured within AI Center. IMPORTANT: Images must be within a sub-folder called “images”. If we are training our model to recognize images of vehicles, for example, our file structure might look like this:

-- training

-- images

-- bus

bua1.jpg

bus2.png

bus3.jpg ...etc

-- van

van1.jpg

van2.png ...etc

-- car

car1.jpg

car2.jpg ...etc

-- evaluation

-- images

-- bus

bua4.jpg

bus5.png

bus6.jpg ...etc

-- van

van4.jpg

van5.png ...etc

-- car

car4.jpg

car5.jpg ...etc

Once you have created these folders with their files on your local drive, you can upload each folder to the datasets section in AI Center. You would upload the “training” folder and the “evaluation” folder separately, thereby creating 2 different datasets.

The name of the directory that holds the files is important. The directory name (car, van, bus) tells the model what to call each file underneath it. It doesn’t matter what the file itself is called, just under what directory it sits.

ML Package — Where the Learning Happens

The ML Package is the the model we are building. This container holds both the package of compiled code (the algorithm) that does the analysis, as well as the resulting lessons it learns. When we create an ML Package, the first step is to choose the package, the algorithm we will use. There are packages developed by UiPath, some open-source packages, or we can import our own. There are a variety of packages each with their own specialty such as classifying images, understanding unstructured text, doing translations, reading signatures, etc. All of the packages are pre-trained, and some allow retraining on your own dataset. Once we choose our package, we give our ML Package a name and save it to our project.

Pipelines — Running a Job

We’ve created our Datasets. We’ve chosen an algorithm to use and saved it in our ML Package. Now it’s time to actually run it and see what happens. In the Pipelines tab, create a pipeline and pass in the arguments:

- the type of pipeline: train, evaluation, full run (run both together)

- the name you want to give this particular run

- the ML Package, created earlier, that you are running

- the latest major & minor version of the package (select minor 0 for training)

- the name of the dataset, created earlier, that you are using

- some packages may require additional arguments as per their documentation

When you click Run Now and Create, the pipeline starts running. Optionally, you can schedule the pipeline to run when you choose. How long the run takes depends on how big your dataset is. When it is finished the Status will show Successful (you may have to refresh your screen). Remember, the pipeline saves everything it learns back into the ML Package. So it’s okay to delete pipelines. If the Status shows Failed, you can click on the pipeline to see the logs to find out what happened. Once you’ve done a training run, an evaluation run will test the package to see how confident it is. Evaluation pipelines return a confidence score between 0 and 1. To increase your evaluation score, make sure your training dataset is of good quality, and large enough.

ML Skills — Deploying to the Tenant

Once we are satisfied with our trained ML Package, in order to use it in an automation, we first need to turn it into something called an ML Skill. This is simple to do under the ML Skills tab. Once created (deployed), it will automatically appear in Studio in the ML Skill dropdown in the ML Skill activity (in the UiPath.MLServices.Activities package). The ML Skill is deployed at the tenant level, and is available to all folders within that tenant.

Summary

You should now have a good understanding of what each tab in the AI Center does and how an AI model is created, evaluated, and deployed. We have learned what the terms mean on each tab, and how they all fit together in creating a deployable ML Skill for use in automations.